RESEARCH

The DCE group at the University of Exeter carries out leading research at the interface between applied mathematics, statistics and high-performance computing. Its research spans fundamental theory in data science and AI through to applied, industry-focused projects, and the group works with major international companies, research centres and university partners.


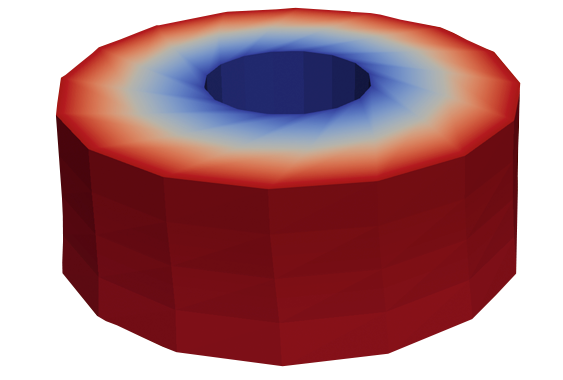

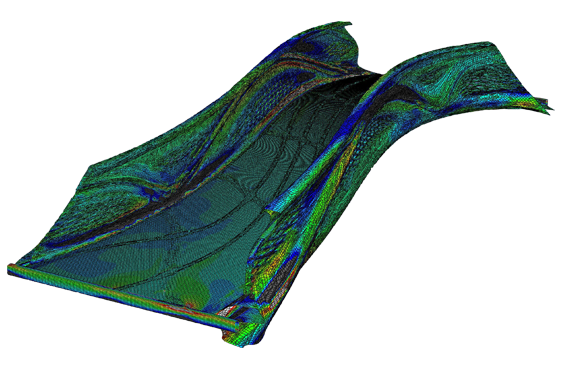

Modelling Multiphase, Multiscale Resin Flow in Composites

Start Date 14/08/17 - Finish Date 06/05/22

Developing a Computer Model to Simulate the Forming of Composites During Infusion Manufacture

In infusion manufacture, optimising the cure cycle time is a complex challenge: too quick a cure can lead to porosity, whereas a slower cure reduces output and increases cost. The aim of this project is to create a computational model that simulates the flow and cure of liquid resin within a composite fibre bed. This will allow the user to alter parameters to optimise the cure process without fabricating many costly prototypes.

Numerous factors affect the infusion and cure processes, so the computational model needs to take many parameters into account, including:

- Resin flow rate
- Local temperatures
- Time
- Cure rate
- Resin viscosity
- Displacement
- Fibre direction

To provide a complete picture, the model needs to encompass the infusion stage of manufacture, as this is when voids can occur in the resin. This requires tracking the fluid front in a two-phase fluid simulation (i.e. the air being expelled and the liquid resin being introduced).

Recent Updates

A three-dimensional, linear model has been created in MATLAB, encompassing fibre compaction, fluid pressure, temperature and resin cure. A non-linear model of fibre compaction has now been created using FEniCS, to which the fluid behaviour of the resin will be added.

Collaborators

- Professor Tim Dodwell, Academic Principal Investigator, https://emps.exeter.ac.uk/engineering/staff/td336


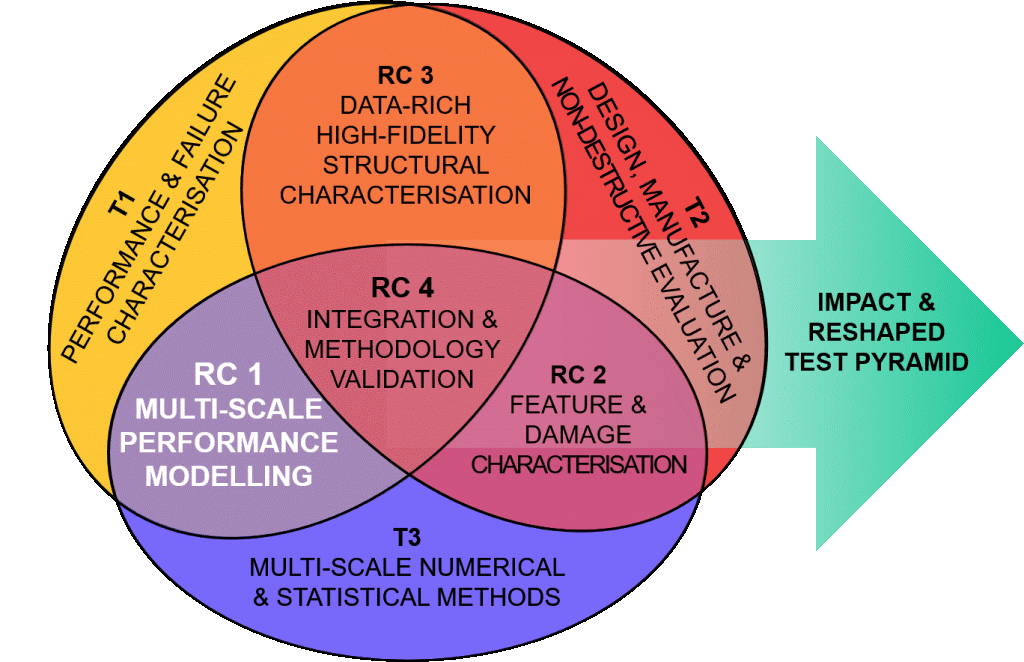

Certification for Design: Reshaping the Testing Pyramid

Start Date 01/08/19 - Finish Date 31/07/24

Developing the scientific foundation that enables lighter, safer and more cost-efficient composite aerostructures.

This research project aims to provide a route for lessening regulatory constraints, moving towards a more cost/performance optimised philosophy by reducing the multiple coupon-level materials tests at the bottom of the test pyramid. It will create the scientific basis for a new culture of virtual certification that will enable significant mass savings and targeted reductions in design costs and development time.

The project is developing a new holistic approach to design at a hitherto unattainable level of fidelity. Bayesian techniques will be used throughout: Markov Chain Monte Carlo to quantify uncertainty and variability, and Bayesian inference to minimise model uncertainty. Lock-In Digital Image Correlation and Thermoelastic Stress Analysis will be integrated and scaled up to provide a non-contact methodology that captures stress and strain in the vicinity of as-designed internal meso/micro features and in-service damage, and quantitatively identifies the mechanisms that initiate damage and lead to failure.

Recent Updates

We have recently built a Gaussian process based, data-driven emulator for quantifying defects and uncertainty in a composite structure (a C-section). The most significant merits of the proposed scheme are:

1) It maps directly from an input wrinkle, described by different parameters, to the full solution displacement field, without the time-consuming stiffness matrix assembly and governing equation solve in every single prediction.

2) Unlike other machine learning algorithms that require a huge amount of training data, the emulator provides both the prediction (expected value) and, from a statistical perspective, the corresponding confidence interval (standard deviation) for an uncertain test input at the same time, even when the amount of training data is limited.

Collaborators

- Professor Tim Dodwell, Academic Principal Investigator, https://emps.exeter.ac.uk/engineering/staff/td336
- Professor Ole Thomsen, http://www.bristol.ac.uk/engineering/people/ole-t-thomsen/overview.html
- Professor Janice Barton, http://www.bristol.ac.uk/engineering/people/janice-barton/index.html
- Professor Ian Sinclair, https://www.southampton.ac.uk/engineering/about/staff/is1.page
- Professor Robert Smith, http://www.bristol.ac.uk/engineering/people/robert-a-smith/overview.html
- Professor Richard Butler, https://researchportal.bath.ac.uk/en/persons/richard-butler-2
- Professor Robert Scheichl, https://researchportal.bath.ac.uk/en/persons/robert-scheichl
- Professor David Woods, https://www.southampton.ac.uk/maths/about/staff/davew.page
- Professor Stephen Hallett, http://www.bristol.ac.uk/engineering/people/stephen-r-hallett/
- Professor Kevin Potter, http://www.bristol.ac.uk/engineering/people/kevin-d-potter/
- Dr Karim Alejandro Anaya-Izquierdo, https://researchportal.bath.ac.uk/en/persons/karim-anaya-izquierdo
- Dr Andrew Rhead, https://researchportal.bath.ac.uk/en/persons/andrew-rhead
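The Gaussian process emulator described under Recent Updates for this project can be illustrated with a minimal sketch. Everything specific here is an assumption made for illustration: the scalar input (a hypothetical wrinkle amplitude), the scalar output (a single displacement value rather than a full field), the `toy_solver` function standing in for an expensive finite element solve, and the kernel hyperparameters. It shows only the general property claimed above, namely that a GP trained on a handful of runs returns both a prediction and a standard deviation.

```python
import numpy as np

def rbf_kernel(a, b, length_scale=0.3, variance=1.0):
    """Squared-exponential covariance between two sets of 1D inputs."""
    d = a[:, None] - b[None, :]
    return variance * np.exp(-0.5 * (d / length_scale) ** 2)

def gp_predict(x_train, y_train, x_test, noise=1e-6):
    """Return the GP posterior mean and standard deviation at x_test."""
    K = rbf_kernel(x_train, x_train) + noise * np.eye(len(x_train))
    K_s = rbf_kernel(x_train, x_test)
    K_ss = rbf_kernel(x_test, x_test)
    L = np.linalg.cholesky(K)
    # Solve K alpha = y via the Cholesky factor.
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train))
    mean = K_s.T @ alpha
    v = np.linalg.solve(L, K_s)
    var = np.diag(K_ss) - np.sum(v ** 2, axis=0)
    return mean, np.sqrt(np.maximum(var, 0.0))

def toy_solver(amplitude):
    """Hypothetical stand-in for an expensive finite element solve."""
    return np.sin(3.0 * amplitude) + 0.5 * amplitude

# Only six "expensive" runs are used as training data.
x_train = np.linspace(0.0, 1.0, 6)
y_train = toy_solver(x_train)

# One training input, one interpolated input, one far outside the data.
x_test = np.array([0.2, 0.5, 1.5])
mean, std = gp_predict(x_train, y_train, x_test)
for x, m, s in zip(x_test, mean, std):
    print(f"amplitude={x:.1f}  prediction={m:.3f}  std={s:.3f}")
```

The standard deviation is close to zero at inputs the emulator was trained on and grows for inputs far from the training data, which is what makes the confidence interval informative when training data is limited.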


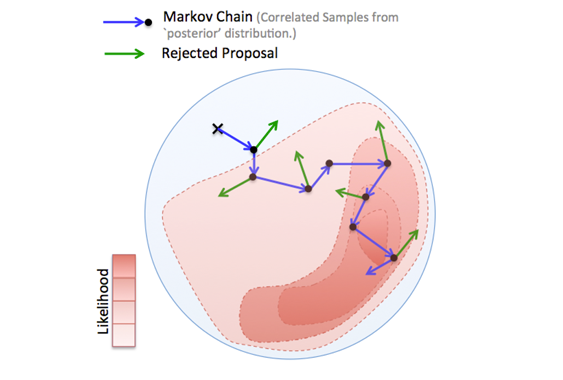

Adaptive multilevel MCMC sampling. Developing an MCMC algorithm for efficient Bayesian inference in multilevel models

Funding. Alan Turing Institute

Developing and implementing a new method called Multi-Level Delayed Acceptance (MLDA) to drastically accelerate inference in many real-world applications where spatial or temporal granularity can be adjusted to create multiple model levels.

Sampling from a Bayesian posterior distribution using Markov Chain Monte Carlo (MCMC) methods (Fig. 1) can be particularly challenging when the evaluation of the posterior is computationally expensive and the parameter space and data are high-dimensional, because many MCMC samples are required to obtain a sufficient representation of the posterior. Such problems occur frequently in Bayesian inverse problems, image reconstruction and probabilistic machine learning, where calculating the likelihood depends on the evaluation of complex mathematical models (e.g. systems of partial differential equations) or large data sets.

Fig. 1 - An MCMC algorithm drawing samples from a 2D distribution. In each step a proposed sample is generated and is either accepted (blue) or rejected (green). If rejected, the previous sample of the chain is replicated. Samples occur more frequently in areas of high probability.

This project has developed and implemented an MCMC approach (MLDA) capable of accelerating existing sampling methods where a hierarchy (or sequence) of computationally cheaper approximations to the 'full' posterior density is available. The idea is to use samples generated at one level as proposals for the level above. A subchain runs for a fixed number of iterations, and the last sample of the subchain is then used as the proposal for the higher-level chain (Fig. 2).

Fig. 2 - Three-level MLDA: For each step of the fine chain, a coarse subchain is created with length 3. The last sample of the subchain is used as a proposal for the fine chain.

The sampler is suitable for situations where a model is expensive to evaluate at high spatial or temporal resolution (e.g. realistic subsurface flow models). In those cases it is possible to use cheaper, lower-resolution versions as coarse models. If the approximations are sufficiently good, this leads to good-quality proposals, high acceptance rates and a high effective sample size compared to other methods.

In addition to this main idea, two enhancements that increase efficiency have been developed. The first is an adaptive error model (AEM). If a coarse model is a poor approximation of the fine model, subsampling on the coarse chain will cause MLDA to diverge from the true posterior, leading to low acceptance rates and a low effective sample size. The AEM corrects coarse model proposals using a Gaussian error model, which effectively offsets, scales and rotates the coarse-level likelihood function (Fig. 3). In practice, it estimates the relative error (or bias) of the coarser model for each pair of adjacent model levels, and corrects the output of the coarser model according to that bias.

Fig. 3 - Adaptive Error Model (AEM): When the coarse likelihood function (red isolines) is a poor fit to the fine one (blue contours), AEM offsets the coarse likelihood function (middle panel) using the mean of the bias, and scales and rotates it (right panel) using the covariance of the bias.

The second feature is a new variance reduction technique, which allows the user to specify a Quantity of Interest (QoI) before sampling. The QoI is calculated for each step at every level, along with the differences in QoI between levels. The set of QoI values and QoI differences can then be used to reduce the variance of the final QoI estimator.
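The core MLDA idea described above, a coarse subchain whose last sample becomes the proposal for the fine chain, can be sketched for two levels. This is a toy illustration under stated assumptions, not the project's implementation: the two Gaussian log-posteriors, the function names, the random-walk step size and the subchain length are all hypothetical, and the adaptive error model and variance reduction are left out. The fine-level acceptance ratio is the standard delayed-acceptance form, which divides out the coarse posterior so that the fine chain still targets the fine posterior.

```python
import numpy as np

def log_post_fine(theta):
    """Hypothetical 'expensive' posterior: N(1.0, 0.5^2), up to a constant."""
    return -0.5 * np.sum((theta - 1.0) ** 2) / 0.5 ** 2

def log_post_coarse(theta):
    """Hypothetical cheap approximation: N(0.9, 0.6^2), up to a constant."""
    return -0.5 * np.sum((theta - 0.9) ** 2) / 0.6 ** 2

def coarse_subchain(theta0, n_steps, step, rng):
    """Random-walk Metropolis on the coarse posterior; returns the last state."""
    theta, lp = theta0, log_post_coarse(theta0)
    for _ in range(n_steps):
        prop = theta + step * rng.standard_normal(theta.shape)
        lp_prop = log_post_coarse(prop)
        if np.log(rng.uniform()) < lp_prop - lp:
            theta, lp = prop, lp_prop
    return theta

def mlda_two_level(n_samples, subchain_length=3, step=0.5, seed=0):
    """Two-level MLDA sketch: one fine evaluation per coarse subchain."""
    rng = np.random.default_rng(seed)
    theta = np.zeros(1)
    lp_fine = log_post_fine(theta)
    chain = np.empty((n_samples, theta.size))
    n_accept = 0
    for i in range(n_samples):
        # The last sample of the coarse subchain is the fine-level proposal.
        prop = coarse_subchain(theta, subchain_length, step, rng)
        lp_fine_prop = log_post_fine(prop)
        # Fine ratio divided by coarse ratio (delayed-acceptance correction).
        log_alpha = (lp_fine_prop - lp_fine) - (
            log_post_coarse(prop) - log_post_coarse(theta)
        )
        if np.log(rng.uniform()) < log_alpha:
            theta, lp_fine = prop, lp_fine_prop
            n_accept += 1
        chain[i] = theta
    return chain, n_accept / n_samples

chain, rate = mlda_two_level(5000)
print("mean:", chain[500:].mean(), "std:", chain[500:].std(), "acceptance:", rate)
```

In this sketch the fine posterior is evaluated once per fine step while the coarse posterior absorbs the random-walk exploration, which is where the saving comes from when the fine model is expensive.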


Digital twins for building control

Start Date 21/09/20 - Finish Date 20/09/24

Creating a digital twin of the Living Systems Institute (LSI) building with the aim of using real-time control and machine learning to reduce energy consumption.

Building services (heating, cooling and ventilation) are necessary for a comfortable building but require a large amount of energy. There is potential to reduce the energy needed by providing these services intelligently, i.e. only when required or when they provide optimal benefit. This could be achieved by creating a digital twin of the building that uses a thermal model to monitor, predict and optimise the energy usage of building services.

There are a number of challenges that must be overcome for the project to succeed. A thermal model of the building must be built and tested; its role will be to predict the temperature in each room of the building. For this to work, various uncertain properties and behaviours of the building must be identified and their uncertainty understood. These include, for example, material properties such as the thermal performance of walls, and typical occupancy patterns. To do this, historical thermal and weather data will be used to train the model and to discover the probability distributions of the uncertain components.

Combined with this, a multi-objective optimisation system must be created so that, for set comfort criteria (for example, heated rooms must not drop below 21°C), a solution can be found that minimises the total building energy usage. Finally, all of this must be done in real time with live data: a solution must be found and then recalculated at intervals, depending on whether the building performs as the model expected.

Recent Updates

A lumped parameter thermal model of one floor of the LSI building has been initialised. Research Software Engineers Omar Jamil and Freddy Wordingham are creating methods to collect data from the LSI's building management system; this data will be used to quantify building model parameters.
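To make the idea of a lumped parameter thermal model concrete, the following minimal sketch treats a single room as one thermal resistance and one thermal capacitance, with a simple thermostat holding the 21°C comfort criterion mentioned above. It is an illustration only: the function name, the parameter values (`r`, `c`, `q_max`), the on/off control rule and the explicit Euler time stepping are all assumptions, not the project's model of the LSI building.

```python
def simulate_room(t_out, t_init=21.0, r=0.005, c=2.0e7,
                  q_max=5000.0, setpoint=21.0, dt=60.0):
    """Single-zone RC model: c * dT/dt = (T_out - T) / r + q.

    t_out    : outdoor temperatures in deg C, one per time step
    r        : thermal resistance to outside in K/W (assumed value)
    c        : thermal capacitance of the room in J/K (assumed value)
    q_max    : heater power in W, switched on below the setpoint
    dt       : time step in seconds
    Returns the room temperature history and heating energy in kWh.
    """
    t_room = t_init
    temps = [t_room]
    energy_j = 0.0
    for t_o in t_out:
        # Simple thermostat: full power below the setpoint, otherwise off.
        q = q_max if t_room < setpoint else 0.0
        # Explicit Euler step of the heat balance.
        t_room += dt * ((t_o - t_room) / r + q) / c
        energy_j += q * dt
        temps.append(t_room)
    return temps, energy_j / 3.6e6

# One day at a constant 5 deg C outside, in one-minute steps.
temps, kwh = simulate_room([5.0] * 1440)
print(f"min temperature: {min(temps):.2f} C, heating energy: {kwh:.1f} kWh")
```

In a calibration setting, parameters such as `r` and `c` would be the uncertain quantities to infer from historical temperature and weather data, and the heating schedule would be the quantity to optimise.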
Collaborators

- Professor Tim Dodwell, Academic Principal Investigator, https://emps.exeter.ac.uk/engineering/staff/td336
- Professor Jonathan Fieldsend, Co-Investigator, http://emps.exeter.ac.uk/computer-science/staff/jefields


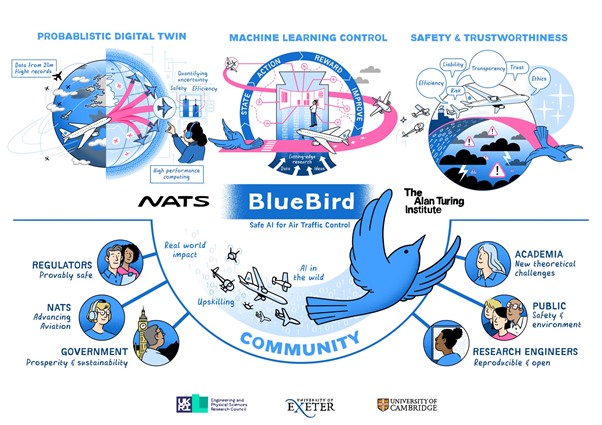

Project Bluebird: An AI system for air traffic control

Start Date 01/07/21 - Finish Date 30/06/26

Funding. EPSRC Prosperity Partnership 5 Year, 13.75M

Advancing probabilistic machine learning to deliver safer, more efficient, and predictable air traffic control

Air traffic control (ATC) is a remarkably complex task. In the UK alone, air traffic controllers handle as many as 8,000 planes per day, issuing instructions to keep aircraft safely separated. Although the aviation industry has been hit by the pandemic, European air traffic is forecast to return to pre-pandemic levels within five years. In the long term, rising passenger numbers and the proliferation of uncrewed aircraft will mean that UK airspace is busier than ever, so next-generation ATC systems are needed to choreograph plane movements as efficiently as possible, keeping our skies safe while reducing fuel burn.

The project has three main research themes:

- Develop a probabilistic digital twin of UK airspace. This real-time, physics-based computer model will predict future flight trajectories and their likelihoods, which is essential information for decision-making. It will be trained on a NATS dataset of at least 10 million flight records, and will take into account the many uncertainties in ATC, such as weather or aircraft performance.
- Build a machine learning system that collaborates with humans to control UK airspace. Unlike current human-centric approaches, this system will simultaneously focus on both the immediate, high-risk detection of potential aircraft conflicts and the lower-risk strategic planning of the entire airspace, thus increasing the efficiency of ATC decision-making. To achieve this, researchers will develop algorithms that use the latest machine learning techniques, such as reinforcement learning, to optimise aircraft paths.
- Design methods and tools that promote safe, explainable and trustworthy use of AI in air traffic control systems. This will involve experiments with controllers to understand how they make decisions, so that these behaviours can be taught to AI systems. The project will also explore ethical questions such as where the responsibility lies if a human-AI system makes a mistake, how to build a system that is trusted by humans, and how to balance the need for both safety and efficiency.

Recent Updates

We will be recruiting additional team members as the project progresses, and will link to the opportunities below as they arise.

- Research Associate, Probabilistic Machine Learning (x2), closes 5 September 23:59
- Research Associate, Machine Learning Control, closes 5 September 23:59
- Exeter / Turing Research Fellow in Machine Learning Control, closes 5 September 23:59
- Exeter / Turing Research Fellow in Human-Computer Interaction, closes 5 September 23:59
- Exeter / Turing Research Fellow in Probabilistic Machine Learning, closes 5 September 23:59

Collaborators

- Professor Mark Girolami, https://www.turing.ac.uk/people/researchers/mark-girolami
- Professor Richard Everson, https://emps.exeter.ac.uk/computer-science/staff/reverson
- Dr. Adrian Weller, https://www.turing.ac.uk/people/researchers/adrian-weller
- Dr. Edmond Awad, https://business-school.exeter.ac.uk/about/people/profile/index.php?web_id=Edmond_Awad
- Dr. Evelina Gabasova, https://www.turing.ac.uk/people/researchers/evelina-gabasova


Intelligent Virtual Test Pyramids for High Value Manufacturing

Start Date 01/10/19 - Finish Date 30/09/24

Funding. UKRI 5 Year Turing AI Fellowship ∼£2.6M

Developing novel AI methods to build a more sustainable aviation industry

There is a paradox in aerospace manufacturing. The aim is to design an aircraft that has a very small probability of failing. Yet to remain commercially viable, a manufacturer can afford only a few tests of the fully assembled plane. How can engineers confidently predict the failure of a low-probability event? This research is developing novel, unified AI methods that intelligently fuse models and data, enabling industry to slash conservatism in engineering design, leading to faster, lighter, more sustainable aircraft.

The engineering solution to building a safe aircraft is the test pyramid. Variability in structural performance is quantified from thousands of small tests of the material (coupon or element tests), hundreds of tests at the intermediate length scales (called details and components) and a handful of tests of the full system. Sequentially, the lower length scales derive 'design allowables' for tests at the higher levels. Yet such derivation is accompanied by significant uncertainty. In practice, this uncertainty means that ad hoc 'engineering safety factors' have to be applied at all length scales. The compound effect of these safety factors leads to significant overdesign of structures. The overdesign results in extra weight, which severely limits the efficiency of modern aircraft and works against a more sustainable aviation industry.

A clear route to flying lighter, more efficient aircraft is to better quantify the uncertainties inherent in the engineering test pyramid, which will allow the industry to confidently trim excessive safety factors. New high-fidelity simulation capabilities in composites now mean that mathematical models can supplement limited experimental data at the higher length scales. Yet with this come additional uncertainties from the models themselves. Existing experimental tests, particularly at the lower length scales, can be used to reduce and quantify uncertainty in the models. The typical statistical approach is Bayesian, in which the distribution of a model's parameters is learned to fit the data probabilistically. However, existing capabilities are notoriously computationally expensive, and are often limited to small-scale applications and simplified experimental data sets. For real engineering test pyramids, the available data and models are more heterogeneous and their connections are complex, driving the need for new fundamental research.

This research will develop a novel, unified AI framework that intelligently fuses models and data at all levels of the engineering test pyramid, remains computationally feasible and is sufficiently robust to support ethical engineering decision-making.

Recent Updates

- Michael Gibson and Ben Fourcin started on 6 July 2020.
- With City Science we have reviewed available modelling software for engineering consumption, and have down-selected "EnergyPlus".
- Obtain building information data for the Living Systems Institute (LSI).

Collaborators

- Professor Tim Dodwell, Academic Principal Investigator, https://emps.exeter.ac.uk/engineering/staff/td336
- Professor Mark Girolami, http://www.eng.cam.ac.uk/profiles/mag92
- Professor Philip Withers, https://www.royce.ac.uk/about-us/professor-phil-withers/


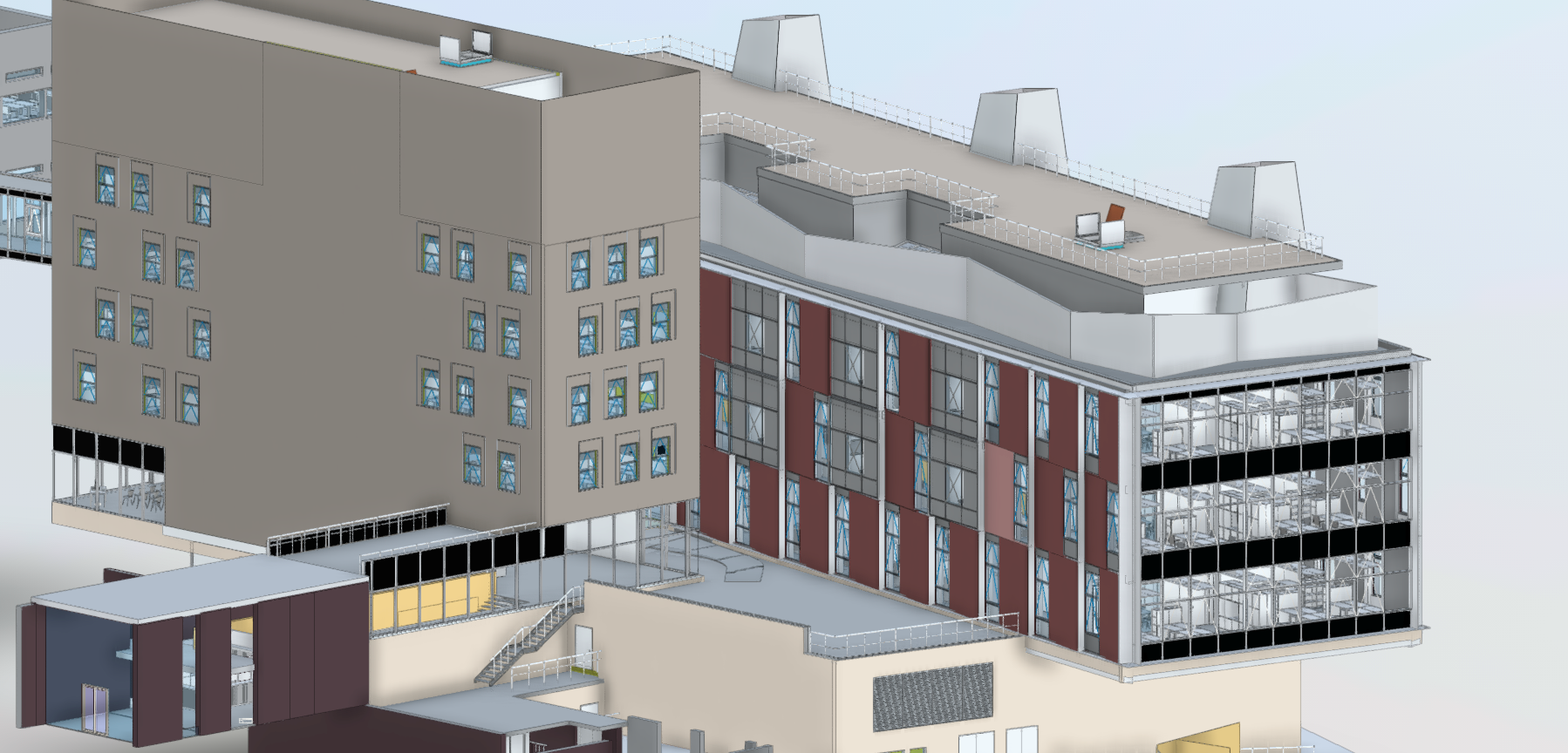

From RIBA to Reality - Deep Digital Twin to enable Human-Centric Buildings for a Carbon Neutral Future

Start Date 01/04/20 - Finish Date 31/06/22

Funding. Innovate UK - Transforming Construction Call $1.1M

Developing novel AI methods to track human usage of a building using mobile-to-WiFi connections. This will enable adaptive control of energy consumption in the building, uniquely tailored to its use.

We'd like to encourage all Exeter staff and students to take part in the project by downloading the Exeter Locate app from Google Play to help drive this innovative research forward.

"RIBA to Reality" is a transformative industrial research project to support the RIBA design process right through to delivery and operation of the next generation of carbon neutral buildings. Using digital twinning technology, the programme will deliver a holistic approach to proactive energy control by elevating Building Information Models (BIM) so that they are capable of tracking live, or simulating, both human building usage and energy demands.

The project is developing new technology to track human building usage using mobile-to-WiFi connections. Bayesian calibration techniques will then use this discrete data to build a live 'heat map' of building usage, drawing on the latest techniques in Deep Gaussian Processes and model order reduction. The human heat map will be embedded into active energy control models for the building (built on top of BIM), with the aim of creating a carbon neutral building of the future.

"RIBA to Reality" is uniquely centred around two university buildings as real-world test beds. The Living Systems Institute, which is built and in operation, will provide real-world data to trial and test the new technology and methods proposed, showing how digital planning assets can be aggregated to create an operational digital twin. Project North Park, a 70M visionary capital investment with the ambition to be carbon neutral over its lifetime, is in the early stages of design. This programme will explore how the new technology can be used to support design decisions at critical stages, in particular looking at how the building could exceed its already ambitious energy targets by taking into account its wider footprint.

Recent Updates

- An Android app is ready to download. It can currently record phone sensor measurements, including GPS location, WiFi signal strengths, magnetometer readings and acceleration data.
- Data is being collected using the app along a stretch of road at the University.
- Large methodological studies are being carried out with the collected data, using state-of-the-art machine learning algorithms to predict a user's GPS location from only their recorded WiFi signal strengths.
- The development of an interface between the LSI building's management system and the heat map of live building usage is underway.

Collaborators

- Professor Tim Dodwell, Academic Principal Investigator, https://emps.exeter.ac.uk/engineering/staff/td336
- Professor Richard Everson, Co-Investigator, http://emps.exeter.ac.uk/computer-science/staff/reverson
- Professor Jonathan Fieldsend, Co-Investigator, http://emps.exeter.ac.uk/computer-science/staff/jefields
- Dr Matt Eames, Co-Investigator, https://emps.exeter.ac.uk/engineering/staff/py99mee
- Mr Laurence Oakes-Ash, Industrial Principal Investigator, City Science, https://www.cityscience.com/team/


Analysis and Design for Accelerated Production and Tailoring of composites (ADAPT)

Start Date 01/09/16 - Finish Date 31/12/20

Establishing novel manufacturing techniques that speed up deposition of stiffness-tailored material

This project is developing novel manufacturing techniques to ensure delivery of better products by minimising the occurrence of manufacturing defects. The project will enable production of high-performance composite components at rates suitable for the next generation of short-range aircraft.

If demand for production of next-generation, short-range commercial aircraft is to be profitably met, current methods for composite airframe manufacture must achieve significant increases in material deposition rates at reduced cost. However, improved rates cannot come at the expense of safety or increased airframe mass. This project will enable a fourfold increase in productivity by establishing novel manufacturing techniques that speed up deposition of stiffness-tailored material. New continuum mechanics-based forming models will ensure delivery of better products by minimising the occurrence of manufacturing defects.

In a parallel stream of activity, new methodologies for the analysis and design of composite structures in which the ply angle and thickness of fibre reinforcement are spatially tailored, both continuously and discretely, will reduce the need for stiffening, leading to significant savings in structural mass (by up to 30%) and manufacturing cost (by up to 20%). Potential structural integrity and damage tolerance issues, such as transition in fibre angle and tapering of laminate thickness from one discrete angle to another, will be addressed.

Recent Updates

A new method for determining the formability of a stack of layers of different fibre orientations has been devised.
Collaborators

- Professor Tim Dodwell, Academic Principal Investigator, https://emps.exeter.ac.uk/engineering/staff/td336
- Professor Richard Butler, https://researchportal.bath.ac.uk/en/persons/richard-butler-2
- Dr Andrew Rhead, https://researchportal.bath.ac.uk/en/persons/andrew-rhead


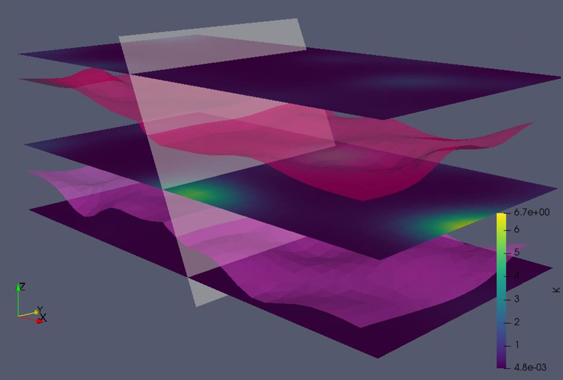

Multi-Level Inversion and Uncertainty Quantification for Groundwater Flow and Transport Modelling

Start Date 24/09/18 - Finish Date 23/09/22

Investigating cutting-edge multi-level Markov Chain Monte Carlo methods and machine learning techniques to improve inversion and uncertainty quantification in hydrogeology

The problem being addressed is the prohibitive computational cost of running standard MCMC on high-dimensional distributions with expensive forward models, such as groundwater flow and transport problems. One solution is the use of surrogate models in multi-level MCMC model hierarchies, and we are currently exploring various machine learning techniques for ultra-fast approximation of model response. The work has applications in both groundwater abstraction and remediation: improved estimates of groundwater flow patterns can improve decision support systems, allowing groundwater abstraction companies to make better sustainable yield estimates, and remediation companies to design tailor-made remediation campaigns.

Inversion of distributed environmental models is typically an ill-posed problem with noisy data. This is a well-known challenge that has been addressed from various angles over the previous half century. Markov Chain Monte Carlo (MCMC) overcomes the ill-posedness by treating models as realisations of a stochastic process, allowing for rigorous uncertainty quantification. However, this power often comes at a prohibitive computational cost, since MCMC requires the forward model to be evaluated many times and, in the case of groundwater flow and potentially an advection/diffusion process, each forward model evaluation involves solving a Partial Differential Equation (PDE).

Delayed Acceptance or Multi-Level MCMC alleviates some of the model evaluation cost by introducing a model hierarchy, in which an inexpensive reduced-order or surrogate model is employed as a filter that rejects highly unlikely parameter sets before the expensive model is run. Hence, a carefully chosen reduced-order model can accelerate MCMC significantly. Emerging machine learning techniques provide ultra-fast predictions for non-linear problems if tuned correctly, and may thus deliver previously unimaginable improvements to multi-level MCMC. This project is about identifying promising reduced-order models and multi-level MCMC techniques that would allow fast inversion and uncertainty quantification of hydrogeological problems.

Recent Updates

We are currently working on a journal paper describing the use of a Deep Neural Network as a surrogate model. The underlying code makes use of the high-performance PDE solver FEniCS along with the popular neural network framework Keras and an in-house MCMC code.

Collaborators

- Professor Tim Dodwell, Academic Principal Investigator, https://emps.exeter.ac.uk/engineering/staff/td336
- Dr David Moxey, PhD Supervisor, https://emps.exeter.ac.uk/engineering/staff/dm550


Uncertainty Quantification for Black Box Models

Start Date 01/04/19 - Finish Date 01/03/22

Using statistical uncertainty quantification methods for computationally expensive engineering problems.

Some PDE-based models in engineering are extremely challenging and expensive to solve, and are often characterised by high-dimensional input and output spaces. We aim to tailor existing methods from the emulation and calibration literature to such problems, providing full-field predictions of the model output at a fraction of the cost of solving the model directly.

An emulator that can predict the full model output (with uncertainty) at a particular setting of the input parameters can be used as a proxy for the true model in many applications that are currently hindered by high computational cost. For example, in problems requiring domain decomposition, where the model is solved on a large number of subdomains, an emulator proxy can be used in place of the exact solution on every subdomain. Full solutions, which are unobtainable or extremely expensive to compute directly, are then constructed from the individual emulators, incorporating the correlation structure between the different subdomains. How best to include this structure is an area of ongoing research within this project.

Recent Updates

Coming Soon

Collaborators

- Professor Tim Dodwell, Academic Principal Investigator, https://emps.exeter.ac.uk/engineering/staff/td336
- Peter Challenor, http://emps.exeter.ac.uk/mathematics/staff/pgc202
- Danny Williamson, https://emps.exeter.ac.uk/mathematics/staff/dw356


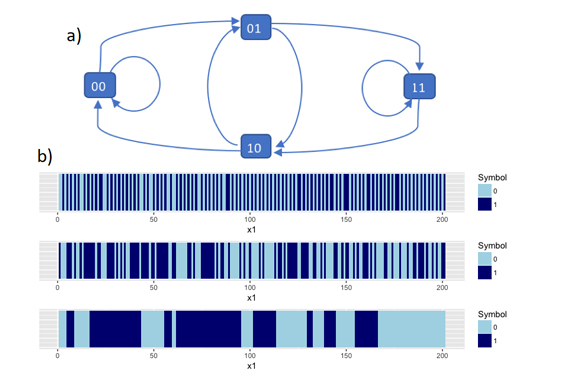

Spatially Correlated Bernoulli Processes

Start Date 01/08/20 - Finish Date 01/08/22

Funding. UKRI 5 Year Turing AI Fellowship ∼£2.6M

Spatially correlated Bernoulli processes using de Bruijn graphs.

I aim to develop a spatially correlated Bernoulli process in n dimensions so that samples from this process have a clustered structure instead of appearing at random. Initial work on a 1-d process (during my PhD) has focused on using de Bruijn graphs, which are something of a generalisation of Markov chains, so that we can control the spread of the correlation. I initially want to extend these ideas to higher dimensions, and then focus on possible applications, for example in classification.

Given a series of Bernoulli trials (or coin tosses) in 'space', it is often the case that the successes in nearby trials are more highly correlated than those in trials far away. We then want to model spatially varying probabilities of success so that the probability of getting a 1 is higher near another 1 (and equally for 0s). Often such processes are modelled using a logistic regression, which allows the probability of observing a 1 to vary smoothly in space, producing a marginal Bernoulli distribution with a probability of success at each point. If we sample a sequence of 0s and 1s from these models, we neglect any form of spatial correlation, and hence there is a much higher chance that the 0s and 1s appear random in the sample.

An example of this is seen in computer modelling or spatial classification. Consider a two-dimensional grid where the input space is split into two separate regions. We can fit a logistic regression to these regions, which gives the probability of being classified into one of the two regions at any point in space. To generate classification predictions, we would sample from an independent Bernoulli distribution at specified input values, where we would expect input points that are closer together to be more likely to be classified into the same region. Drawing marginally, however, gives a much higher chance of misclassifications, especially close to the region boundaries.

We therefore want to produce a correlated Bernoulli process, and recent work has focused on using the structures in de Bruijn graphs. Given the symbols 0 and 1, a length m de Bruijn graph is a directed graph whose nodes consist of all the possible length m sequences of 0s and 1s. Edges are drawn between nodes so that the end of the previous node is the same as the start of the next. Since probabilities can be attached to each edge to give the probability of transitioning from sequence to sequence, de Bruijn graphs can be seen as a generalisation of a Markov chain. The spread of the correlation can be controlled by changing both the size of the node sequences and the transition probabilities themselves. This means that we can create both 'sticky' (clustered) sequences and 'anti-sticky' (alternating) sequences of 0s and 1s.

I have worked on producing both a run length distribution and inference: given a sequence of 0s and 1s, we can estimate the de Bruijn process that was most likely used to generate it. This work has been successful at defining a correlated Bernoulli process in one dimension, and the next step is to extend this to higher dimensions. This is a difficult problem since the direction associated with de Bruijn graphs does not easily apply to a spatial grid. Initial work has focused on adapting multivariate Markov chains, and/or redefining the form of the dependent de Bruijn sequences. Due to the directional restrictions on a spatial grid, I will also develop a more generalised non-directional de Bruijn process.
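The one-dimensional construction described above can be sketched as a walk on a length m de Bruijn graph: each node is a word of m bits, and each step appends a new bit and drops the oldest one. The rule used here for the transition probabilities (a single `stickiness` parameter applied to the fraction of 1s in the current word) is a hypothetical parametrisation chosen for illustration, not the one used in the project, and the function names are likewise assumptions.

```python
import itertools
import random

def transition_probs(m, stickiness):
    """P(next bit = 1 | current word) for every length-m binary word.

    stickiness in (-1, 1): positive values favour repeating the symbols
    already in the word, negative values favour the opposite symbol, and
    0 gives independent fair coin tosses. (Hypothetical parametrisation.)
    """
    probs = {}
    for word in itertools.product((0, 1), repeat=m):
        frac_ones = sum(word) / m
        probs[word] = 0.5 + stickiness * (frac_ones - 0.5)
    return probs

def sample_sequence(n, m, stickiness, seed=0):
    """Sample n bits by walking the de Bruijn graph of word length m."""
    rng = random.Random(seed)
    probs = transition_probs(m, stickiness)
    word = tuple(rng.randint(0, 1) for _ in range(m))
    seq = list(word)
    while len(seq) < n:
        bit = 1 if rng.random() < probs[word] else 0
        seq.append(bit)
        # Follow the edge: drop the oldest symbol, append the new one.
        word = word[1:] + (bit,)
    return seq

def same_neighbour_fraction(seq):
    """Fraction of adjacent pairs that share the same symbol."""
    return sum(a == b for a, b in zip(seq, seq[1:])) / (len(seq) - 1)

for label, m, s in [("sticky", 2, 0.9), ("independent", 2, 0.0), ("anti-sticky", 1, -0.9)]:
    seq = sample_sequence(60, m, s)
    print(f"{label:12s}", "".join(map(str, seq)))
```

With m = 1 the process reduces to an ordinary two-state Markov chain, which is the sense in which the de Bruijn construction generalises it; larger m lets the next symbol depend on a longer history.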
Recent Updates

- PhD Thesis (Submitted) 09/04/20
- University of Exeter Internal Seminar 02/06/20
- Isaac Newton Institute Talk 07/19

Collaborators

- Professor Tim Dodwell, Academic Principal Investigator, https://emps.exeter.ac.uk/engineering/staff/td336
- Prof Peter Challenor, PhD Supervisor, https://emps.exeter.ac.uk/mathematics/staff/pgc202
- Prof Henry Wynn (LSE), http://www.lse.ac.uk/CATS/People/Henry-Wynn-homepage
- Dr Daniel Williamson, https://emps.exeter.ac.uk/mathematics/staff/dw356


A digital twin of the world’s first 3D printed steel bridge

Start Date 01/08/20 - Finish Date 01/08/22

Measuring, monitoring, and analysing the performance of the world's largest 3D printed metal structure: a 12 metre-long stainless steel bridge due to be installed across a canal in Amsterdam in 2018.

Researchers are measuring, monitoring, and analysing the performance of the world's largest 3D printed metal structure: a 12 metre-long stainless steel bridge. Data from a sensor network installed on the bridge is being fed into a 'digital twin' of the bridge, a living computer model that imitates the physical bridge with growing accuracy in real time as the data comes in.

The project is run in partnership with MX3D, a 3D printing company. The sensor network has been installed on the bridge by a team of structural engineers, mathematicians, computer scientists, and statisticians from the Turing and the University of Cambridge's Centre for Smart Infrastructure and Construction.
Recent Updates

Coming Soon

Collaborators

- Professor Tim Dodwell, Academic Principal Investigator, https://emps.exeter.ac.uk/engineering/staff/td336
- Professor Mark Girolami, https://www.christs.cam.ac.uk/person/professor-mark-girolami
- Professor Leroy Gardner, https://www.imperial.ac.uk/people/leroy.gardner
- Dr Craig Buchanan, https://www.imperial.ac.uk/people/craig.buchanan
- Gijs Van Der Velden, https://www.linkedin.com/in/gijs-van-der-velden-2b795112/
- Dr Mohammed Elshafie, http://www.eng.cam.ac.uk/profiles/me254
- Dr Din-Houn Lau, https://www.imperial.ac.uk/people/dhl
- Dr Pinelopi Kyvelou, https://www.imperial.ac.uk/people/pinelopi.kyvelou11